General

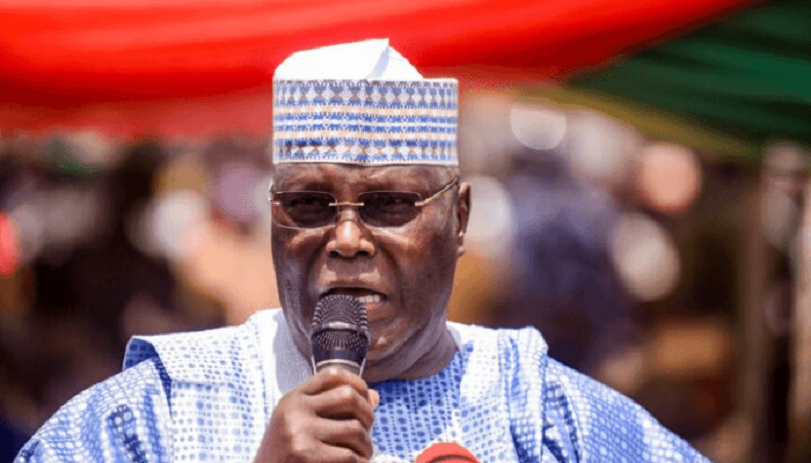

Atiku Faults FG’s Jubilation Over Kebbi Schoolgirls Release

By Adedapo Adesanya

Former Vice President Atiku Abubakar has criticised the federal government over the release of abducted schoolgirls in Kebbi State, saying their freedom should not be presented as an achievement but as evidence of Nigeria’s worsening security environment.

In a statement on Wednesday, Mr Abubakar warned that the return of the schoolgirls was “not a trophy moment” but “a damning reminder that terrorists now operate freely, negotiate openly, and dictate terms while this administration issues press statements to save face.”

President Bola Tinubu had welcomed the release in a statement issued by his spokesperson, Mr Bayo Onanuga, on Tuesday, expressing relief that “all the 24 girls have been accounted for” and commending the security agencies for their efforts.

Mr Onanuga had also appeared on Arise Television, saying the effort was part of the Tinubu administration’s work towards securing Nigerians’ lives.

Mr Abubakar dismissed the narrative as “a shameful attempt to whitewash a national tragedy and dress up government incompetence as heroism.”

“If, as Onanuga claims, the DSS and the military could ‘track’ the kidnappers in real time and ‘made contact’ with them, then the question is simple: Why were these criminals not arrested, neutralised, or dismantled on the spot?

“Why is the government boasting about talking to terrorists instead of eliminating them? Why is kidnapping now reduced to a routine phone call between criminals and state officials?” the former vice president asked.

He added that the administration’s explanation suggests that “terrorists and bandits have become an alternative government, negotiating, collecting ransom, and walking away untouched, while the presidency celebrates their compliance.”

“No serious nation applauds itself for negotiating with terrorists it claims to have under surveillance. No responsible government congratulates itself for allowing abductors to walk back into the forests to kidnap again,” Atiku said.

The abduction occurred on November 17, when armed assailants stormed the Government Girls’ Secondary School in Maga, Kebbi State, killing one staff member and kidnapping 25 students from their dormitory.

One girl escaped shortly after, leaving 24 in captivity until their release on Tuesday.

General

Nigeria Signs Defence Joint Venture with Terra Industries

By Adedapo Adesanya

Nigeria has signed a joint venture with defence technology company, Terra Industries Limited, as part of efforts to boost the country’s defence industrial capacity and advance indigenous high-technology development.

The Defence Industries Corporation of Nigeria (DICON) and Terra signed a Memorandum of Understanding (MoU) for the establishment of the Joint Venture Company (JVC), both parties announced on Monday.

The partnership provides a robust framework for the local production, assembly, research and development (R&D), and training in high-technology systems, including drones, cybersecurity solutions, robotics, and other ancillary software and hardware platforms.

The MoU, executed pursuant to the DICON Act 2023, underscores DICON’s statutory mandate to collaborate with indigenous and foreign defence-related industries through Public-Private Partnerships. Under the agreement, the Joint Venture Company will operate as a subsidiary of DICON, jointly promoted and owned by DICON and Terra Industries, and duly incorporated in Nigeria.

This marks the latest move by Terra, which recently became a $100 million company following raises from investors including Flutterwave CEO, Mr Gbenga Agboola; American actor Jared Leto; and 8VC, founded by the co-founder of Palantir Technologies Inc., Mr Joe Lonsdale. Other investors included Valor Equity Partners, Lux Capital, SV Angel, Leblon Capital GmbH, Silent Ventures LLC, and Nova Global.

Terrahaptix, founded by Mr Nathan Nwachukwu and Mr Maxwell Maduka, is using the new funding to grow Terra’s manufacturing capacity as it expands into cross-border security and counter-terrorism.

The latest agreement with DICON is designed to establish advanced production and assembly lines for high-tech equipment within Nigeria, while promoting meaningful technology transfer, skills development, and specialised training for Nigerian personnel.

It also aims to strengthen local sourcing of raw materials, reduce dependence on imports, and enhance domestic industrial capacity and strategic autonomy. Additionally, the partnership will support the supply of security equipment to Nigerian security agencies more widely, positioning Nigeria as a competitive player in the global defence manufacturing sector.

Under the agreement, Terra Industries will provide technical expertise, professional services, and training, and will attract both local and foreign investment to strengthen the defence industrial ecosystem.

The company will also facilitate the procurement of production equipment, coordinate local and international training programmes, and provide access to manufacturing know-how, tooling, spare parts, and established defence sector supply chains.

Speaking on this, Mr Nathaniel Nwachukwu, CEO of Terra Industries, noted that the partnership “demonstrates confidence in indigenous Nigerian engineering capability and creates a platform for sustainable defence technology development, innovation, and export competitiveness.”

On his part, Major General BI Alaya, the Director General of DICON, described the agreement as “a transformational step toward strengthening Nigeria’s defence manufacturing base, reducing import dependence, and positioning Nigeria as a regional hub for advanced innovation.”

The need for security has risen in recent years, as groups such as Islamic State and al-Qaeda are gaining ground in Africa, converging along a swathe of territory that stretches from Mali to Nigeria.

General

Deep Blue Project: Mobereola Seeks Air Force Support

By Adedapo Adesanya

The Director General of the Nigerian Maritime Administration and Safety Agency (NIMASA), Mr Dayo Mobereola, is seeking enhanced cooperation between the agency and the Nigerian Air Force (NAF) with the aim of strengthening tactical air support within the Deep Blue project.

During a courtesy visit last week, Mr Mobereola told the Chief of Air Staff, Air Marshal S. K. Aneke, at the NAF Headquarters in Abuja that the Air Force was a strategic partner in enhancing maritime security in Nigeria and sustaining the momentum of the Deep Blue Project’s success.

According to the DG, “We are here to seek the Air Force’s support, given the importance of tactical air surveillance to the Deep Blue Project. Nigeria is the only African country with a record of zero piracy within the last four years. The Deep Blue Project platforms have been used to achieve zero piracy and sea robberies in the Gulf of Guinea, and we need your collaboration to sustain this momentum.”

He further emphasised that international trade depends on security, which is why vessels prefer to call at or transit through countries where they are secure. “With the traffic we have now, we need to show more security might through collaboration to strengthen our trade viability because of the risks attached to our route. We need these collaborations to sustain what we have achieved so far with the Deep Blue Project.”

The NIMASA DG expressed hope that the collaboration with the Nigerian Air Force will reduce response time.

On his part, the Chief of Air Staff, Air Marshal S. K. Aneke, noted that the Air Force desires to be “a very supportive and collaborative partner with NIMASA and is ready to match the Agency step by step and side by side to achieve the desired results.”

He noted that “collaboration between NIMASA and the Nigerian Air Force under the Deep Blue Project can be strengthened through a joint strategic framework, integrated command structures, and a standing steering committee to ensure shared objectives and accountability.

“Establishing a joint maritime domain awareness fusion cell will enable real-time intelligence sharing, synchronised surveillance, and faster response to maritime threats and ensure sustained operational effectiveness across Nigeria’s territorial waters and exclusive economic zone,” he said, according to a statement.

The Air Force Chief added that the Air Force can also support NIMASA outside the Deep Blue Project operations by providing its own ISR platforms, tactical air support, and rapid airborne deployment for interdictions and search and rescue missions.

While thanking the NIMASA DG for the basic training the Agency has provided to aircraft pilots under the Deep Blue Project, Air Marshal Aneke also highlighted operational challenges needing NIMASA’s attention, including bridging the communication gap between NAF operators and NIMASA, higher-level and in-depth maintenance training, readily available fuel for aircraft to avoid mission delays, and the provision of flying kits, among others.

He pledged the Air Force’s collaboration and assured the delegation that NIMASA’s request had been noted and that things would begin to move at thrice the current speed going forward.

General

Nigeria’s Democracy Suffocating Under Tinubu—Atiku

By Modupe Gbadeyanka

Former Vice President, Mr Atiku Abubakar, has lambasted the administration of President Bola Tinubu over the low turnout at the FCT Area Council elections held last Saturday.

In a statement issued by his Media Office, the Adamawa-born politician claimed that the health of Nigeria’s democracy under the current administration was under threat.

According to him, “When citizens lose faith that their votes matter, democracy begins to die. What we are witnessing is not mere voter apathy. It is a direct consequence of an administration that governs with a chokehold on pluralism. Democracy in Nigeria is being suffocated slowly, steadily, and dangerously.”

He warned that the steady erosion of participatory governance, if left unchecked, could inflict irreversible damage on the democratic fabric painstakingly built over decades.

“A democracy without vibrant opposition, without free political competition, and without public confidence is democracy in name only. If this chokehold is not released, history will record this era as the period when our hard-won freedoms were traded for fear and conformity,” he stressed.

Mr Abubakar said the turnout for the poll was below 20 per cent, with the Abuja Municipal Area Council (AMAC) recording 7.8 per cent.

He noted that such low civic participation in the nation’s capital, the symbolic heartbeat of the federation, is not accidental but the predictable outcome of a political environment poisoned by intolerance, intimidation, and the systematic weakening of opposition voices.

The presidential candidate of the Peoples Democratic Party (PDP) in the 2023 general elections stated that the ruling All Progressives Congress (APC) under Mr Tinubu has pursued a deliberate policy of shrinking democratic space, harassing dissenters, coercing defectors, and fostering a climate where alternative political viewpoints are treated as threats rather than contributions to national development.

He called on opposition parties and democratic forces across the country to urgently close ranks and forge a united front, declaring, “This is no longer about party lines; it is about preserving the Republic. The time to stand together to rescue and rebuild Nigeria is now.”
