Technology

Understanding Web 3.0: The Next Internet Sensation

By Adedapo Adesanya

The World Wide Web can be said to have started with Web1, as introduced by Tim Berners-Lee, a British scientist who proposed it in 1989 while working at the Organisation Européenne pour la Recherche Nucléaire (CERN).

He submitted the proposal for what is known today as the World Wide Web as an effective communication system at CERN and envisioned the Web in three ways: a Web of documents (Web 1.0), a Web of people (Web 2.0) and a Web of data (Web 3.0).

Web 1 is aptly referred to as a “read-only web” because it offered very few visuals besides text. In comparison to today’s Web (Web 2.0), which allows users to interact with information online, users of Web 1 were more passive and only read things online.

There was no comment section, as there is on Twitter or Facebook today, where users could air their views or share opinions about someone else’s posts or articles.

This created the need for Web2, which all but displaced Web1 and brought about the beginning of user-generated content on the web, meaning people could create their own posts or write articles.

Web 2.0 is regarded as the business revolution in the computer industry caused by the move to the Internet as a platform, and an attempt to understand the rules for success on that new platform, which include building applications that harness network effects to get better the more people use them.

Web2 was truly transformative; it birthed social media networks such as Facebook and Twitter, which have dominated online social interactions to date, as well as cloud computing, e-commerce, and online financial services.

Many of the things we enjoy on the Internet today were only possible with the creation of Web 2.

Web 2.0 applications tend to interact much more with the end user. As such, the end-user is not only a user of the application but also a participant using tools including podcasting, blogging, tagging, curating with RSS, social bookmarking, social networking, social media, and web content voting.

But as the saying goes, change is constant, and the next big thing now is Web3. Web3 jobs are emerging as Web 3.0 aims to make the Internet more inclusive and take control away from big corporations like Facebook, Google, and Amazon. It aims to do this by decentralising the Internet.

So, with Web3, people will be able to control their own data, as control by services like Facebook, Google, and others will be replaced with information held on multiple computing devices, acting more like a peer-to-peer internet with no single authority.
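As a rough illustration of the peer-to-peer idea described above, the sketch below copies a piece of data to several peers and identifies it by the SHA-256 hash of its content, so a reader can fetch it from any peer and check it without trusting a central authority. The `Peer` class and the `publish` and `fetch` functions are hypothetical names made up for this sketch; real Web3 systems are far more involved.

```python
import hashlib


class Peer:
    """A hypothetical node that keeps copies of data, keyed by content hash."""

    def __init__(self, name):
        self.name = name
        self.store = {}


def content_id(data: bytes) -> str:
    # The identifier is derived from the data itself, not assigned by an authority.
    return hashlib.sha256(data).hexdigest()


def publish(peers, data: bytes) -> str:
    # Copy the data to every peer so that no single device is the only holder.
    cid = content_id(data)
    for peer in peers:
        peer.store[cid] = data
    return cid


def fetch(peers, cid: str) -> bytes:
    # Ask peers in turn and accept only a copy whose hash matches the identifier.
    for peer in peers:
        data = peer.store.get(cid)
        if data is not None and content_id(data) == cid:
            return data
    raise KeyError(cid)


peers = [Peer("alice"), Peer("bob"), Peer("carol")]
cid = publish(peers, b"my profile data")

peers[0].store.clear()             # one peer loses its copy
peers[1].store[cid] = b"tampered"  # another peer serves an altered copy
recovered = fetch(peers, cid)      # the intact copy on the third peer is used
```

Because the identifier is a hash of the content, an altered copy fails the check and is skipped, which is one simple way a network can do without a single trusted server.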

Another claimed benefit of Web3 is that it could help avoid Internet hacks and leaks, since data sits with specific users rather than with a single service, which proponents say improves data security and privacy.

Once it becomes a reality, the virtual world will see resources, applications, and content that are accessible to all.

It has also been noted that Web3 will create room for the advancement of technologies like cryptocurrencies, virtual reality, automated realities, Non-Fungible Tokens (NFTs), and other digital enhancements.

However, Web 3 has had its critics, with the world’s richest man, Elon Musk, saying the concept is more of a “marketing buzzword” than a reality, while former Twitter CEO Jack Dorsey argued that it would ultimately end up being owned by venture capitalists.

Nevertheless, it never hurts for a full stack developer to take advantage of what Web3 offers.

Technology

Capillary Technologies Acquires SessionM from Mastercard

By Modupe Gbadeyanka

A software product company established in 2012, Capillary Technologies India Limited, has acquired the customer engagement and loyalty company, SessionM, from Mastercard.

This followed a definitive agreement signed by the global leader in AI-powered customer loyalty and engagement solutions with the renowned digital payments firm.

The acquisition of SessionM is the latest in a series of strategic moves by Capillary, following its successful listing on India’s stock exchanges in November 2025.

With SessionM in its portfolio, Capillary reinforces its position as a global leader in enterprise loyalty, offering a leading platform to the world’s most sophisticated enterprise brands.

Mastercard has identified Capillary Technologies, consistently recognised as a Leader in The Forrester Wave, as the ideal partner to lead SessionM into its next era of growth.

As part of the agreement, a specialised team within SessionM will transition to Capillary, ensuring that the platform’s deep technical expertise is preserved.

SessionM’s esteemed global customer base—which includes Fortune 500 retailers, airlines, and CPG brands—will continue to receive the same high-calibre support and service they experienced before the acquisition.

“M&A has been a key growth strategy for Capillary over the years, and as a public company, we are delivering on that promise to our shareholders and the market.

“By bringing SessionM into our portfolio, we are not just expanding our footprint across the globe; we are further strengthening our loyalty capabilities to deliver one of the industry’s most comprehensive offerings.

“Our mission remains to provide enterprises across industries with specialised, AI-native loyalty technology solutions,” the chief executive of Capillary Technologies, Aneesh Reddy, commented.

Technology

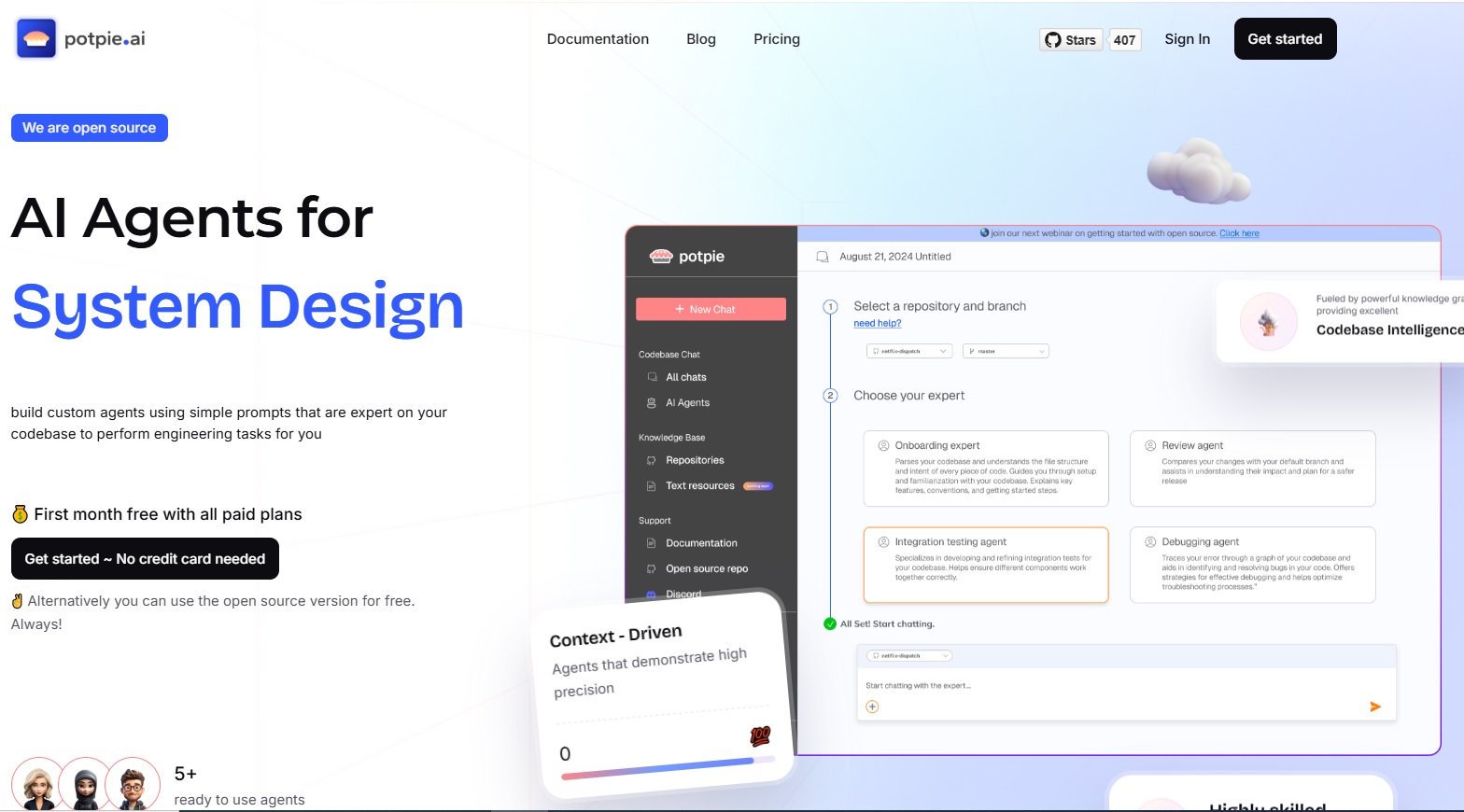

Emergent Ventures, Others Invest $2.2m in Potpie

By Dipo Olowookere

Potpie has announced a pre-seed round of about $2.2 million to help engineering teams unify context across their entire stack and make AI agents genuinely useful in complex software environments.

Potpie was established by Aditi Kothari and Dhiren Mathur, who were determined to unify context across the entire engineering stack and enable spec-driven development.

As generative AI adoption accelerates, most tools focus on surface-level code generation while ignoring the deeper problem of context.

Large language models are powerful, but without access to system-level understanding, tooling history, and architectural intent, they struggle in real production environments.

Traditional approaches rely on senior engineers to manually hold this context together, a model that breaks down at scale and fails when AI agents are introduced.

The platform enables teams to automate high-impact and non-trivial use cases across the software development lifecycle, like debugging cross-service failures, maintaining and writing end-to-end tests, blast radius detection and system design.

It is designed for enterprise companies with large and complex codebases, starting at around one million lines of code and scaling to hundreds of millions.

Rather than acting as another coding assistant, Potpie builds a graphical representation of software systems, infers behaviour and patterns across modules, and creates structured artefacts that allow agents to operate consistently and safely.
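To give a sense of what blast radius detection over a graph of a software system can look like, here is a minimal sketch. It is not Potpie’s method, which the statement does not describe; it simply assumes a hypothetical map of which module depends on which, and walks the reversed edges to find everything a change could reach. The `blast_radius` function and the module names are invented for illustration.

```python
from collections import defaultdict, deque


def blast_radius(depends_on, changed):
    """Return every module that directly or indirectly depends on `changed`."""
    # Invert the edges: for each module, record who depends on it.
    dependents = defaultdict(set)
    for module, deps in depends_on.items():
        for dep in deps:
            dependents[dep].add(module)

    # Breadth-first walk outwards from the changed module.
    affected = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for module in dependents[current]:
            if module not in affected:
                affected.add(module)
                queue.append(module)

    affected.discard(changed)  # only relevant if the graph contains a cycle
    return affected


# Hypothetical dependency map: module -> modules it depends on.
depends_on = {
    "checkout": ["payments", "cart"],
    "payments": ["auth"],
    "cart": ["catalog"],
    "catalog": [],
    "auth": [],
}

impacted = blast_radius(depends_on, "auth")
```

In this toy map, a change to `auth` reaches `payments` and, through it, `checkout`, while `cart` and `catalog` are untouched.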

A statement made available to Business Post on Monday revealed that the funding support came from Emergent Ventures, All In Capital, DeVC and Point One Capital.

The capital will be used to support early enterprise deployments, expand the engineering team, and continue building Potpie’s core context and agent infrastructure, it was disclosed.

“As AI makes code generation easier, the real challenge shifts to reasoning across massive, interconnected systems. Potpie is our answer to that shift, an ontology-first layer that helps enterprises truly understand and manage their software,” Kothari was quoted as saying in the disclosure.

A Managing Partner at Emergent Ventures, Anupam Rastogi, said, “In large enterprises, the real challenge is not generating code, it is understanding the system deeply enough to change it safely.

“Potpie’s ontology-first architecture, combined with rigorous context curation and spec-driven development, creates a structured model of the entire engineering ecosystem. This allows AI agents to reason across services, dependencies, tickets, and production signals with the clarity of a senior engineer. That is what makes Potpie uniquely capable of solving complex RCA, impact analysis, and high-risk feature work even in codebases exceeding 50 million lines.”

Technology

Expert Reveals Top Cyber Threats Organisations Will Encounter in 2026

By Adedapo Adesanya

Organisations in 2026 face a cybersecurity landscape markedly different from previous years, driven by rapid artificial intelligence adoption, entrenched remote work models, and increasingly interconnected digital systems, with experts warning that these shifts have expanded attack surfaces faster than many security teams can effectively monitor.

According to the World Economic Forum’s Global Cybersecurity Outlook 2026, AI-related vulnerabilities now rank among the most urgent concerns, with 87 per cent of cybersecurity professionals worldwide highlighting them as a top risk.

In a note shared with Business Post, Mr Danny Mitchell, Cybersecurity Writer at Heimdal, said artificial intelligence presents a “category shift” in cyber risk.

“Attackers are manipulating the logic systems that increasingly run critical business processes,” he explained, noting that AI models controlling loan decisions or infrastructure have become high-value targets. Machine learning systems can be poisoned with corrupted training data or manipulated through adversarial inputs, often without immediate detection.

Mr Mitchell also warned that AI-powered phishing and fraud are growing more sophisticated. Deepfake technology and advanced language models now produce convincing emails, voice calls and videos that evade traditional detection.

“The sophistication of modern phishing means organisations can no longer rely solely on employee awareness training,” he said, urging multi-channel verification for sensitive transactions.

Supply chain vulnerabilities remain another major threat. Modern software ecosystems rely on numerous vendors and open-source components, each representing a potential entry point.

“Most organisations lack complete visibility into their software supply chain,” Mr Mitchell said, adding that attackers frequently exploit trusted vendors or update mechanisms to bypass perimeter defences.

Meanwhile, unpatched software vulnerabilities continue to expose organisations to risk, as attackers use automated tools to scan for weaknesses within hours of public disclosure. Legacy systems and critical infrastructure are especially difficult to secure.

Ransomware operations have also evolved, with criminals spending weeks inside networks before launching attacks.

“Modern ransomware operations function like businesses,” Mr Mitchell observed, noting that they employ double extortion tactics to maximise pressure on victims.

Mr Mitchell concluded that the common thread across 2026 threats is complexity, noting that organisations need to abandon the idea that they can defend against everything equally, as this approach spreads resources too thin and leaves critical assets exposed.

“You cannot protect what you don’t know exists,” he said, urging organisations to prioritise visibility, map dependencies, and focus resources on the most critical assets.
